YOLOv8 Implementation on British Sign Language System with Edge Detection Extraction

DOI: https://doi.org/10.56705/ijodas.v6i2.276

Keywords: YOLOv8, British Sign Language (BSL), Hand Gesture Detection, Deep Learning, Segmentation Model

Abstract

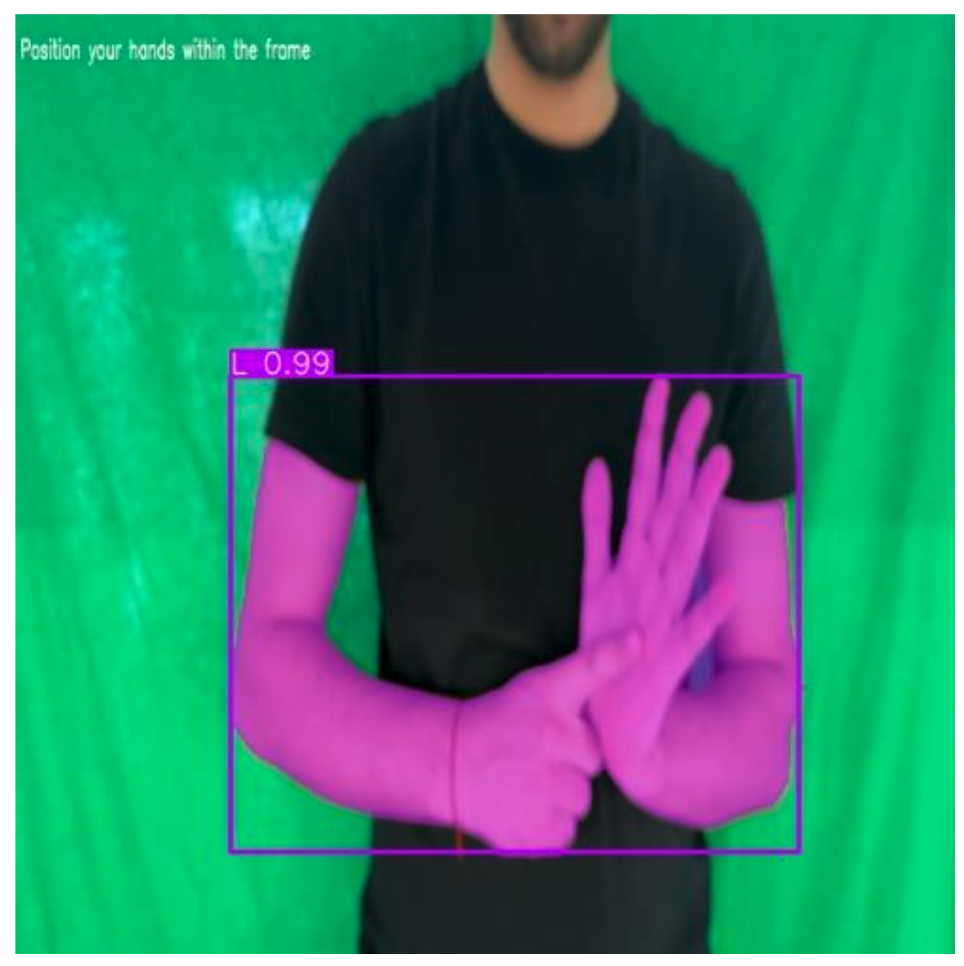

This study presents the development and implementation of a deep learning-based system for recognizing static hand gestures in British Sign Language (BSL). The system combines the YOLOv8 model with edge detection extraction, with the aim of improving recognition accuracy and facilitating communication for individuals with hearing impairments. The dataset was obtained from Kaggle and comprises images of BSL hand signs captured against a uniform green background under consistent lighting. Preprocessing consisted of resizing the images to 640 × 640 pixels, normalizing pixel values, filtering out low-quality images, and applying data augmentation (horizontal flipping, rotation, shear, and brightness adjustment) to improve robustness. Edge detection was applied to accentuate the contours of the hand and support more precise gesture identification. Manual annotation produced both bounding boxes and segmentation masks, allowing two model variants to be trained: YOLOv8 (non-segmentation) and YOLOv8-seg (segmentation). Both models were trained for 100 epochs using the Adam optimizer and binary cross-entropy loss, with training-to-testing splits of 50:50, 60:40, 70:30, and 80:20. The evaluation metrics were mAP@50, precision, recall, and F1-score. The YOLOv8-seg model with an 80:20 split performed best, with a precision of 0.974, a recall of 0.968, and an mAP@50 of 0.979, indicating robust detection and localization. Although it requires greater computational resources, the segmentation model offers better contour recognition, making it well suited to high-precision applications.
However, the model's generalizability is limited by the use of static gestures and controlled backgrounds. Future work should incorporate dynamic gestures, varied backgrounds, and uncontrolled lighting to improve real-world performance.
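The preprocessing and edge detection steps named in the abstract can be illustrated with a minimal, dependency-free sketch. The abstract does not say which edge detector was used, so a Sobel gradient magnitude is assumed here purely for illustration. The function names and the toy image are hypothetical and not taken from the study.

```python
# Illustrative sketch only. Assumption: Sobel gradient magnitude stands in
# for the unspecified edge detector; all names below are hypothetical.

def normalize(image):
    """Scale 8-bit grayscale pixel values from [0, 255] to [0.0, 1.0]."""
    return [[pixel / 255.0 for pixel in row] for row in image]


def horizontal_flip(image):
    """Mirror the image left to right (one of the listed augmentations)."""
    return [row[::-1] for row in image]


SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def sobel_edges(image):
    """Return the Sobel gradient magnitude; border pixels are left at 0."""
    height, width = len(image), len(image[0])
    edges = [[0.0] * width for _ in range(height)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = gy = 0.0
            for dy in range(3):
                for dx in range(3):
                    value = image[y + dy - 1][x + dx - 1]
                    gx += SOBEL_X[dy][dx] * value
                    gy += SOBEL_Y[dy][dx] * value
            edges[y][x] = (gx * gx + gy * gy) ** 0.5
    return edges


# Toy 5x5 grayscale "image": dark on the left, bright on the right.
toy = [[0, 0, 255, 255, 255] for _ in range(5)]
edges = sobel_edges(normalize(toy))
flipped = horizontal_flip(toy)
```

On the toy image, the response is nonzero only in the interior columns next to the dark-to-bright step. This is the sense in which edge extraction accentuates a contour. A real pipeline would more likely use an image library for resizing to 640 × 640 and for edge extraction.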
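The training setup described above (two variants, 100 epochs, 640-pixel input, Adam) might be expressed with the Ultralytics Python package roughly as follows. This is a sketch under assumptions, not the authors' script. The nano-sized weights (`yolov8n.pt`, `yolov8n-seg.pt`) and the dataset file `bsl.yaml` are hypothetical choices, since the abstract gives neither the model size nor any file names. The loss is left at the library default.

```python
# Sketch only. Assumptions: Ultralytics is installed, "bsl.yaml" is a
# hypothetical dataset config, and the nano model size is an arbitrary pick.

TRAIN_ARGS = {
    "data": "bsl.yaml",    # hypothetical path to the dataset definition
    "epochs": 100,         # as stated in the abstract
    "imgsz": 640,          # matches the 640 x 640 resize
    "optimizer": "Adam",   # as stated in the abstract
}

# Pretrained checkpoints for the two variants compared in the study.
VARIANTS = {
    "detection": "yolov8n.pt",
    "segmentation": "yolov8n-seg.pt",
}


def train_variant(name):
    """Train one variant; ultralytics is imported lazily, on first call."""
    from ultralytics import YOLO

    model = YOLO(VARIANTS[name])
    return model.train(**TRAIN_ARGS)
```

Calling `train_variant("segmentation")` would start a run with these settings. In practice the two variants need differently formatted labels (boxes versus polygon masks), so each would likely point at its own dataset file.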
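The abstract lists F1-score among the metrics but quotes only precision and recall for the best model. Assuming the standard harmonic-mean definition, the F1 value implied by those two figures can be computed directly. The same sketch shows how the four split ratios divide a dataset. The dataset size of 1000 images is a made-up number for illustration, as the abstract does not give the real count.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (standard F1 definition)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def split_counts(n_images, train_percent):
    """Return (train, test) image counts for a given training percentage."""
    n_train = n_images * train_percent // 100
    return n_train, n_images - n_train


# Precision and recall reported for YOLOv8-seg with the 80:20 split.
f1 = f1_score(0.974, 0.968)
print(f"implied F1: {f1:.3f}")

# Hypothetical dataset size, used only to show how the ratios behave.
N_IMAGES = 1000
splits = {f"{p}:{100 - p}": split_counts(N_IMAGES, p) for p in (50, 60, 70, 80)}
for ratio, (n_train, n_test) in splits.items():
    print(ratio, n_train, n_test)
```

The implied F1 is about 0.971. A figure reported in the full paper could differ slightly if F1 was averaged per class rather than derived from the aggregate precision and recall.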

License

Authors retain copyright and full publishing rights to their articles. Upon acceptance, authors grant Indonesian Journal of Data and Science a non-exclusive license to publish the work and to identify itself as the original publisher.

Self-archiving. Authors may deposit the submitted version, accepted manuscript, and version of record in institutional or subject repositories, with citation to the published article and a link to the version of record on the journal website.

Commercial permissions. Uses intended for commercial advantage or monetary compensation are not permitted under CC BY-NC 4.0. For permissions, contact the editorial office at ijodas.journal@gmail.com.

Legacy notice. Some earlier PDFs may display “Copyright © [Journal Name]” or only a CC BY-NC logo without the full license text. To ensure clarity, the authors maintain copyright, and all articles are distributed under CC BY-NC 4.0. Where any discrepancy exists, this policy and the article landing-page license statement prevail.